Malaysian SFT

Collection (11 items)

SFT using LoRA and DoRA, including reasoning.

LoRA SFT of openai/gpt-oss-20b on an initial version of mesolitica/Malaysian-Reasoning

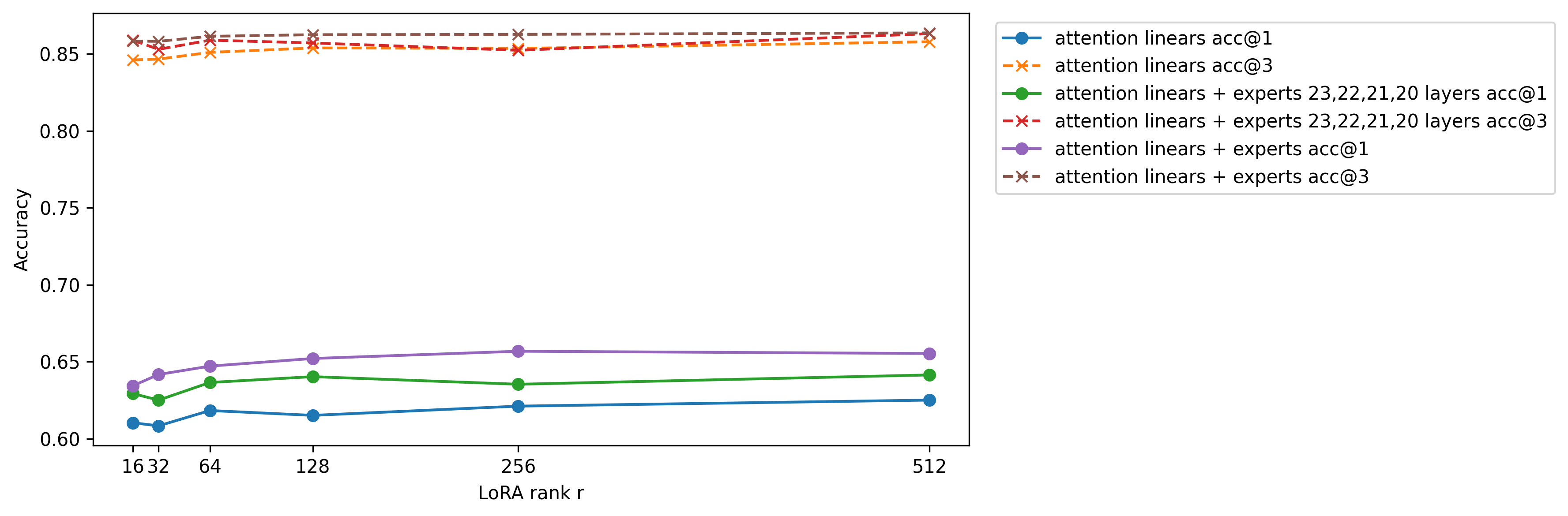

- Flash Attention 3 with attention-sink support via kernels-community/vllm-flash-attn3.
- Layer selection based on exp_avg_sq (the Adam optimizer's second-moment state): the top 4 selected layers are 3, 2, 18, and 1.
- + + with the rank of each equal to the total rank divided by the number of active experts, following https://thinkingmachines.ai/blog/lora/
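The notes above mention selecting layers by `exp_avg_sq` and splitting a LoRA rank across active experts. The sketch below illustrates both ideas under stated assumptions; it is not the repository's code. It assumes (hypothetically) that each layer is scored by the mean of its parameters' `exp_avg_sq` values and that parameter names follow a `model.layers.<i>.` pattern. The source does not say how the per-layer score was aggregated, so `select_top_layers` and `per_expert_rank` are illustrative helpers only.

```python
import re
from collections import defaultdict
from statistics import mean

# Assumed (hypothetical) parameter naming: "model.layers.<idx>.<module>...".
LAYER_RE = re.compile(r"layers\.(\d+)\.")


def select_top_layers(exp_avg_sq: dict[str, list[float]], k: int = 4) -> list[int]:
    """Rank decoder layers by the mean Adam second-moment estimate (exp_avg_sq)
    of their parameters and return the indices of the top-k layers.

    Mean aggregation is an assumption made for this sketch."""
    per_layer: dict[int, list[float]] = defaultdict(list)
    for name, values in exp_avg_sq.items():
        match = LAYER_RE.search(name)
        if match is None:
            continue  # skip parameters outside decoder layers (embeddings, lm_head, ...)
        per_layer[int(match.group(1))].extend(values)
    scores = {layer: mean(vals) for layer, vals in per_layer.items()}
    # Highest score first; ties broken by the lower layer index.
    return sorted(scores, key=lambda layer: (-scores[layer], layer))[:k]


def per_expert_rank(total_rank: int, active_experts: int) -> int:
    """Split a total LoRA rank evenly across the experts active per token."""
    if total_rank % active_experts:
        raise ValueError("total_rank must be divisible by active_experts")
    return total_rank // active_experts
```

In practice the `exp_avg_sq` values would come from an optimizer state dict collected during a short warm-up run. For the rank split, a hypothetical total rank of 256 over 4 active experts gives `per_expert_rank(256, 4) == 64`.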

In this model repository we upload only the best variant: LoRA on the attention linear layers only, with rank 256 and alpha 512.
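As a rough illustration of that configuration, the pure-Python sketch below shows a LoRA-wrapped linear layer with rank 256 and alpha 512, so the low-rank update is scaled by alpha / r = 2.0. It also includes a filter that targets attention projections only. The module names `q_proj`, `k_proj`, `v_proj`, `o_proj` are an assumption about the Hugging Face gpt-oss implementation and should be checked against `model.named_modules()`. With the PEFT library, a similar setup would likely be expressed as `LoraConfig(r=256, lora_alpha=512, target_modules=[...])`, but the exact config used is in the linked source code.

```python
import random

# Assumed attention projection names (verify against the actual model).
ATTENTION_TARGETS = ("q_proj", "k_proj", "v_proj", "o_proj")


def is_lora_target(module_name: str) -> bool:
    """True only for attention linear layers; everything else stays frozen."""
    return module_name.rsplit(".", 1)[-1] in ATTENTION_TARGETS


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update:
    y = W x + (alpha / r) * B (A x)."""

    def __init__(self, weight, r=256, alpha=512, seed=0):
        self.weight = weight  # frozen, shape [out_dim][in_dim]
        out_dim, in_dim = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.scaling = alpha / r  # 512 / 256 = 2.0
        # A: small random init, shape [r][in_dim]
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(r)]
        # B: zero init, shape [out_dim][r], so the adapter starts as a no-op
        self.B = [[0.0] * r for _ in range(out_dim)]

    @staticmethod
    def _matvec(matrix, x):
        return [sum(a * b for a, b in zip(row, x)) for row in matrix]

    def forward(self, x):
        base = self._matvec(self.weight, x)
        update = self._matvec(self.B, self._matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]
```

Because `B` starts at zero, the wrapped layer initially reproduces the frozen layer exactly, and only `A` and `B` would be trained.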

Source code at https://github.com/Scicom-AI-Enterprise-Organization/small-ablation/blob/main/malaysian-reasoning

Special thanks to https://www.scitix.ai/ for the H100 node!